NTU and Shanghai AI Lab Open-Source PhysX‑Anything: Generate Simulation‑Ready 3D Physical Assets from a Single Image

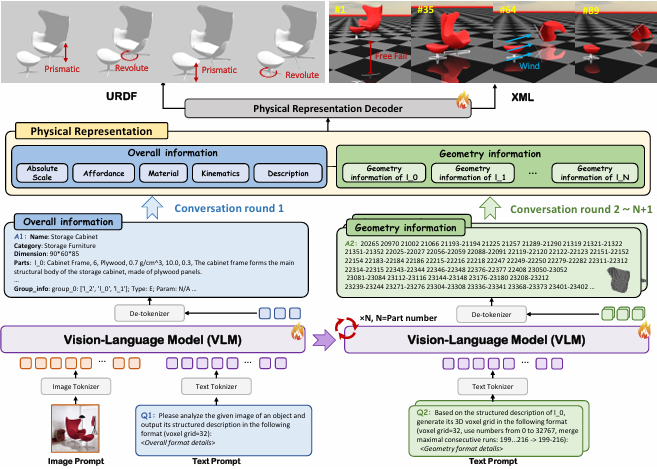

In robot policy training, the shortage of high-quality simulated 3D assets has long been a bottleneck. Manual modeling is time-consuming, and conventional image-to-3D generation tends to prioritize appearance over physical properties, which leads to distorted motion and collision errors once the assets are imported into a simulator. In November, Nanyang Technological University and Shanghai Artificial Intelligence Laboratory jointly open-sourced the PhysX-Anything framework to address this problem. From a single RGB image, it generates a complete 3D asset, including geometry, joint mechanisms, and physical parameters (mass, center of mass, friction coefficient, etc.), that can be imported directly into simulation platforms such as MuJoCo and Isaac Sim for robot training.
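To make "simulation-ready" concrete, here is a minimal sketch of how predicted physical parameters might be written out as a MuJoCo MJCF model. The article does not describe PhysX-Anything's actual export format, so the function name `asset_to_mjcf`, its arguments, and the single hinged box are all hypothetical; only the MJCF element and attribute names (`inertial`, `joint`, `geom`, `friction`, and so on) come from MuJoCo itself.

```python
import xml.etree.ElementTree as ET


def asset_to_mjcf(name, mass, com, diag_inertia, friction,
                  half_extents, hinge_axis, hinge_range_deg):
    """Build a minimal MJCF string for one hinged, box-shaped part.

    Hypothetical helper for illustration; not part of PhysX-Anything.
    """
    def fmt(values):
        # MJCF expects space-separated numbers in vector attributes.
        return " ".join(f"{v:g}" for v in values)

    root = ET.Element("mujoco", model=name)
    # State the angle unit explicitly so the joint range is read as degrees.
    ET.SubElement(root, "compiler", angle="degree")
    world = ET.SubElement(root, "worldbody")
    body = ET.SubElement(world, "body", name=name, pos="0 0 0.5")

    # Explicit inertial properties override those inferred from geometry.
    ET.SubElement(body, "inertial", pos=fmt(com), mass=f"{mass:g}",
                  diaginertia=fmt(diag_inertia))
    # One revolute joint with a limited motion range.
    ET.SubElement(body, "joint", name=f"{name}_hinge", type="hinge",
                  axis=fmt(hinge_axis), limited="true",
                  range=fmt(hinge_range_deg))
    # Collision/visual geometry; friction is (sliding, torsional, rolling).
    ET.SubElement(body, "geom", type="box", size=fmt(half_extents),
                  friction=fmt(friction))
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Made-up values for a cabinet door, for illustration only.
    print(asset_to_mjcf("door", 2.5, (0.0, 0.0, 0.1), (0.02, 0.02, 0.01),
                        (0.8, 0.005, 0.0001), (0.4, 0.02, 0.9),
                        (0, 0, 1), (0, 90)))
```

The resulting string can be saved as an `.xml` file and loaded by MuJoCo. A real asset would carry mesh geometry and several bodies rather than a single box.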

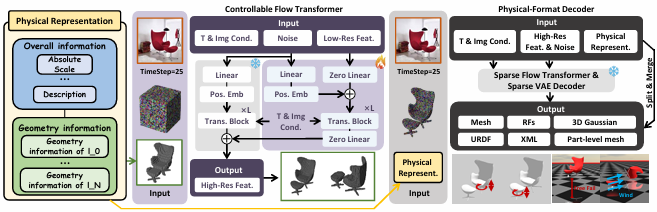

Three key technical designs balance physical realism and generation efficiency:

- Physics-first coarse-to-fine pipeline: The framework first predicts an object's overall physical properties (e.g., mass distribution, friction coefficient), then refines geometric structure and joint-motion constraints at the component level. This ordering avoids the physical inaccuracies common in appearance-driven generation. For example, it will not shift a chair leg's center of mass just to match the image, a change that could make the chair tip over in simulation.

- Compact 3D representation: The object's surface information, joint axes, and physical attributes are encoded into an 8K-dimensional latent vector, so all of this information can be decoded in a single inference step. Compared with current state-of-the-art methods, inference is 2.3× faster, which eases the efficiency bottleneck for real-time generation.

- Explicit physical supervision: The team augmented the dataset with 120,000 real-world physical measurements (e.g., object center of mass, moment of inertia) and introduced loss functions for center-of-mass, inertia, and collision-box errors during training. Applying these constraints end to end, from data through training, keeps the motion behavior of generated assets aligned with real-world physics.
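The three supervision terms named in the last point can be sketched as a single weighted loss. The article does not give the actual formulas, so everything here is an assumption for illustration: Euclidean distance for the center of mass, a Frobenius norm for the inertia tensor, and `1 - IoU` on axis-aligned boxes for the collision-box term. The function names and weights are hypothetical.

```python
import math


def com_error(pred, target):
    """Euclidean distance between predicted and measured centers of mass."""
    return math.dist(pred, target)


def inertia_error(pred, target):
    """Frobenius norm of the difference between two 3x3 inertia tensors."""
    return math.sqrt(sum((p - t) ** 2
                         for p_row, t_row in zip(pred, target)
                         for p, t in zip(p_row, t_row)))


def box_iou(box_a, box_b):
    """IoU of two axis-aligned boxes, each given as (min_xyz, max_xyz)."""
    (a_min, a_max), (b_min, b_max) = box_a, box_b
    intersection = 1.0
    for lo_a, hi_a, lo_b, hi_b in zip(a_min, a_max, b_min, b_max):
        overlap = min(hi_a, hi_b) - max(lo_a, lo_b)
        if overlap <= 0:
            return 0.0  # boxes are disjoint along this axis
        intersection *= overlap

    def volume(lo, hi):
        return math.prod(h - l for l, h in zip(lo, hi))

    union = volume(a_min, a_max) + volume(b_min, b_max) - intersection
    return intersection / union


def physics_loss(pred, target, w_com=1.0, w_inertia=1.0, w_box=1.0):
    """Weighted sum of the three error terms (weights are assumptions)."""
    return (w_com * com_error(pred["com"], target["com"])
            + w_inertia * inertia_error(pred["inertia"], target["inertia"])
            + w_box * (1.0 - box_iou(pred["box"], target["box"])))
```

In an actual training loop these terms would be written with a differentiable tensor library and added to the geometry loss. The plain-Python version only shows what each term measures.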

Validation: gains in both accuracy and practicality

On the two core metrics, Geometry-Chamfer (geometric accuracy) and Physics-Error (physical deviation), PhysX-Anything significantly outperforms recent methods such as ObjPhy and PhySG: geometric error is reduced by 18% and physical error by 27%. Detailed results show that generated assets have an absolute scale error of less than 2 cm and a joint-motion-range error under 5 degrees. Assets generated for IKEA-style furniture, kitchen tools, and similar items behave realistically when simulated in Isaac Sim.
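For readers unfamiliar with Chamfer-style metrics, the sketch below computes a symmetric Chamfer distance between two point sets sampled from a generated and a reference surface. The exact definition behind Geometry-Chamfer is not given in the article (variants differ in squaring and normalization), so this unsquared, mean-based form is an assumption, and `chamfer_distance` is a hypothetical name.

```python
import math


def chamfer_distance(points_a, points_b):
    """Symmetric Chamfer distance between two non-empty 3D point sets.

    For each point, find the nearest point in the other set, average those
    distances, and sum the two directions. Brute force, O(len(a) * len(b)).
    """
    def mean_nearest(source, destination):
        return sum(min(math.dist(p, q) for q in destination)
                   for p in source) / len(source)

    return mean_nearest(points_a, points_b) + mean_nearest(points_b, points_a)


if __name__ == "__main__":
    generated = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    reference = [(0.0, 0.0, 0.0)]
    # generated -> reference averages (0 + 1) / 2; reference -> generated is 0.
    print(chamfer_distance(generated, reference))  # 0.5
```

Lower values mean the generated surface lies closer to the reference. Practical evaluations usually sample thousands of points and use a spatial index instead of the brute-force search shown here.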

Real-scene testing further highlights practicality: after the generated assets were imported into Isaac Sim, robot-grasping success rates rose by 12% and the number of training steps fell by 30%. The assets can be used directly for policy learning without additional parameter tuning, which substantially lowers upfront preparation costs.

Open‑source and future roadmap: expanding to dynamic scenes

The code, pre-trained weights, dataset, and evaluation benchmarks for PhysX-Anything are now available on GitHub. The team plans to release version 2.0 in the first quarter of 2026, adding support for video input so that component trajectories over time can be predicted from dynamic videos. This would provide richer asset support for robot policy learning in dynamic scenarios (e.g., falling objects, tool manipulation).
