What is Deep Learning?

Deep Learning is a specialized and powerful subset of Machine Learning, which itself is a branch of Artificial Intelligence (AI). It focuses on training computers to learn from vast amounts of data and perform complex tasks that typically require human-like perception and cognition. At its core, Deep Learning uses artificial neural networks, computational models loosely inspired by the structure and function of the human brain. These multi-layered networks learn to make predictions or classifications by automatically discovering intricate patterns and representations in data, rather than relying on hand-engineered features.

How Deep Learning Works

Deep Learning models process data through a hierarchy of layers in an artificial neural network. Each layer transforms the output of the layer before it and passes the result on to the next, so successive layers extract progressively more abstract features.

- Input Layer: This layer receives the raw data, such as pixel values from an image, words from text, or audio signals.

- Hidden Layers: These intermediate layers perform computations to identify and extract features or patterns from the data. Each layer builds a more complex representation based on the previous layer's output. The "depth" in Deep Learning refers to the number of these hidden layers.

- Output Layer: The final layer produces the result, such as a classification label (e.g., "cat"), a continuous value, or a sequence of words.
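To make the layer hierarchy concrete, here is a minimal forward pass written in plain Python with no framework. It assumes fully connected layers, a ReLU activation in the hidden layers, and a softmax output that turns raw scores into class probabilities. The layer sizes (4 inputs, two hidden layers of 8 units, 3 outputs) and the helper names are illustrative choices, not part of any library.

```python
import math
import random

def relu(v):
    """Elementwise ReLU: keeps positive values, zeroes out negatives."""
    return [max(0.0, x) for x in v]

def softmax(v):
    """Turn raw output scores into probabilities that sum to 1."""
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def init_layer(n_in, n_out, rng):
    """Small random initial weights and zero biases (one weight row per output unit)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def forward(x, layers):
    """Input -> hidden layers (ReLU) -> output layer (softmax)."""
    h = x
    for weights, biases in layers[:-1]:
        h = relu(dense(h, weights, biases))    # hidden layer: a new representation of the data
    weights, biases = layers[-1]
    return softmax(dense(h, weights, biases))  # output layer: class probabilities

rng = random.Random(0)
# Hypothetical sizes: 4 input features -> 8 hidden -> 8 hidden -> 3 classes.
sizes = [4, 8, 8, 3]
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

probs = forward([0.2, 0.7, 0.1, 0.9], layers)
print(probs)  # three probabilities summing to 1; exact values depend on the random weights
```

With two hidden layers this network has a "depth" of two; adding another `8` to `sizes` would add a third hidden layer without changing any other code.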

Connections between artificial neurons (nodes) have associated weights, which determine how strongly one neuron influences another. The weights start out as random values and are systematically adjusted during training. Training relies on backpropagation, the algorithm that works out how much each weight contributed to the error, together with an optimization algorithm that updates the weights:

- The network makes a prediction.

- The output is compared to the correct answer (the "ground truth"), and an error (loss) is calculated using a loss function.

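- This error is propagated backward through the network (backpropagation), using the chain rule to compute the gradient of the loss with respect to each weight.

- An optimization algorithm (like Stochastic Gradient Descent) uses these gradients to adjust each weight in the direction that reduces the loss, incrementally improving the model's accuracy.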
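The four training steps above can be sketched end to end in plain Python. This is a minimal, hypothetical example: a network with one hidden layer of 8 tanh units learns the toy target y = x² from 21 labeled points, using a squared-error loss, hand-derived backpropagation via the chain rule, and per-example Stochastic Gradient Descent with an assumed learning rate of 0.05. Real frameworks compute these gradients automatically and operate on whole tensors at once.

```python
import math
import random

rng = random.Random(42)

# Hypothetical toy dataset: inputs x in [-1, 1], each labeled with the target y = x**2.
data = [(i / 10, (i / 10) ** 2) for i in range(-10, 11)]

H = 8  # number of hidden units (an arbitrary choice)
params = {
    "w": [rng.uniform(-1, 1) for _ in range(H)],  # input -> hidden weights
    "b": [0.0] * H,                               # hidden biases
    "v": [rng.uniform(-1, 1) for _ in range(H)],  # hidden -> output weights
    "c": 0.0,                                     # output bias
}

def forward(p, x):
    """Step 1: make a prediction. Also returns hidden activations for backprop."""
    h = [math.tanh(w * x + b) for w, b in zip(p["w"], p["b"])]
    y_hat = sum(v * hj for v, hj in zip(p["v"], h)) + p["c"]
    return y_hat, h

def loss(p, x, y):
    """Step 2: squared-error loss against the ground-truth label y."""
    y_hat, _ = forward(p, x)
    return 0.5 * (y_hat - y) ** 2

def backward(p, x, y):
    """Step 3: backpropagation, applying the chain rule from the loss back to every weight."""
    y_hat, h = forward(p, x)
    d_out = y_hat - y  # dL/dy_hat
    grads = {"v": [d_out * hj for hj in h], "c": d_out}
    # dL/dz_j for each hidden pre-activation z_j = w_j*x + b_j, using tanh'(z) = 1 - tanh(z)**2
    d_z = [d_out * v * (1 - hj * hj) for v, hj in zip(p["v"], h)]
    grads["w"] = [dz * x for dz in d_z]
    grads["b"] = d_z
    return grads

def sgd_step(p, grads, lr):
    """Step 4: move every weight a small step against its gradient."""
    for key in ("w", "b", "v"):
        p[key] = [val - lr * g for val, g in zip(p[key], grads[key])]
    p["c"] -= lr * grads["c"]

def mean_loss(p):
    return sum(loss(p, x, y) for x, y in data) / len(data)

initial_loss = mean_loss(params)
for epoch in range(500):
    rng.shuffle(data)  # "stochastic": visit the examples in a random order each epoch
    for x, y in data:
        sgd_step(params, backward(params, x, y), lr=0.05)
final_loss = mean_loss(params)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

The dataset, network size, learning rate, and number of epochs are arbitrary assumptions chosen so the example runs quickly; the point is the structure of the loop (predict, measure the loss, backpropagate, update), which stays the same in much larger models. A common sanity check for hand-written gradients is to compare `backward` against finite differences of `loss`.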

In the common supervised setting, this process requires large datasets of labeled data, where each input example has a known correct output. For instance, training a model to recognize handwritten digits requires thousands of images, each labeled with the correct digit (0-9).
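For a classification task like digit recognition, each label is usually converted into a numeric target that the loss function can compare with the model's output. The sketch below assumes the model outputs 10 probabilities (one per digit) and uses one-hot encoding with a cross-entropy loss, a common pairing for classifiers. The probability values are made up for illustration.

```python
import math

NUM_CLASSES = 10  # digits 0-9

def one_hot(label, num_classes=NUM_CLASSES):
    """Encode an integer label as a vector with 1.0 at the label's position, 0.0 elsewhere."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

def cross_entropy(probs, label):
    """Loss for one example: minus the log of the probability assigned to the correct class."""
    return -math.log(probs[label])

def cross_entropy_one_hot(probs, target):
    """Same loss written against a one-hot target: -sum(t * log(p)) over classes where t > 0."""
    return -sum(t * math.log(p) for t, p in zip(target, probs) if t > 0)

# Hypothetical model outputs for one image whose ground-truth label is 3.
label = 3
probs = [0.01, 0.02, 0.05, 0.80, 0.02, 0.02, 0.03, 0.02, 0.02, 0.01]        # confident and right
probs_wrong = [0.80, 0.02, 0.05, 0.01, 0.02, 0.02, 0.03, 0.02, 0.02, 0.01]  # confident and wrong

print(one_hot(label))                               # [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(round(cross_entropy(probs, label), 4))        # 0.2231
print(round(cross_entropy(probs_wrong, label), 4))  # 4.6052
```

A confident wrong answer is penalized far more heavily than a confident right one, which is what pushes the network's weights toward the labeled answers during training.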

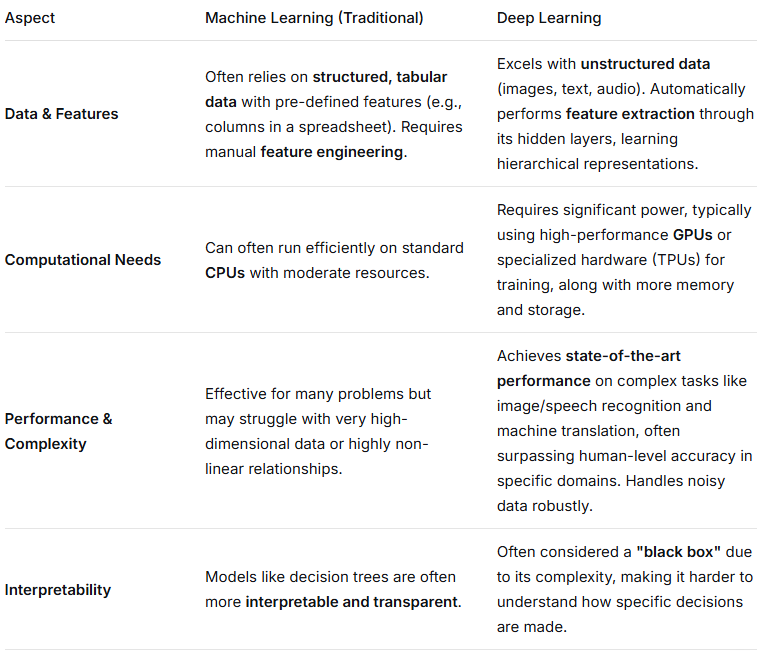

Deep Learning vs. Machine Learning: Key Differences

While both fall under AI, Deep Learning has distinct characteristics compared to classical Machine Learning:

Popular Deep Learning Frameworks

Frameworks are essential tools that abstract complex low-level operations, allowing developers and researchers to build, train, and deploy models efficiently.

- TensorFlow: An open-source platform developed by Google. Known for its production-ready, scalable architecture, and extensive ecosystem. Supports multiple languages and hardware (CPU, GPU, TPU).

- PyTorch: An open-source framework developed by Facebook's AI Research lab. Gained immense popularity for its dynamic computation graph, which offers greater flexibility and ease of debugging, making it a favorite for research.

- Keras: A high-level API that runs on top of a lower-level framework: historically TensorFlow (among others), and since Keras 3 also JAX or PyTorch. Praised for its user-friendliness and simplicity, enabling rapid prototyping of standard neural networks.

- Other Notable Frameworks: PyTorch Lightning (a lightweight wrapper for PyTorch), JAX (from Google, gaining traction in research), and domain-specific frameworks.
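Much of what these frameworks abstract away is automatic differentiation: recording the operations a model performs and then running backpropagation over them. The toy sketch below illustrates the idea behind a dynamic (define-by-run) computation graph like PyTorch's, using a hypothetical scalar `Value` class. It is not how any framework is actually implemented; real frameworks work on tensors, support many more operations, and run on GPUs.

```python
import math

# Toy, hypothetical class for illustration; not part of any framework's API.
class Value:
    """A scalar that records how it was computed, so gradients can flow back through it."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # the Values this one was computed from
        self._local_grads = local_grads  # d(self)/d(parent) for each parent

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    __radd__ = __add__
    __rmul__ = __mul__

    def tanh(self):
        t = math.tanh(self.data)
        return Value(t, (self,), (1 - t * t,))

    def backward(self):
        """Reverse-mode autodiff: visit nodes from output to inputs, applying the chain rule."""
        order, seen = [], set()

        def visit(node):  # depth-first post-order: every node lands after its parents
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad  # accumulate: a node may feed several others

# The graph is built on the fly as ordinary Python code runs.
w, x, b = Value(0.5), Value(2.0), Value(-0.3)
y = (w * x + b).tanh()  # a single neuron: tanh(w*x + b)
y.backward()
print(y.data, w.grad, x.grad, b.grad)
```

In PyTorch the same pattern is expressed with tensors created using `requires_grad=True`: calling `.backward()` on the result fills in each input tensor's `.grad` attribute.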

Deep Learning Applications Transforming Industries

Deep Learning drives innovation across numerous fields by enabling machines to perceive, understand, and act with unprecedented accuracy.

- Computer Vision: Powers facial recognition, medical image analysis (e.g., detecting tumors in scans), autonomous vehicles (object detection), and industrial quality inspection.

- Natural Language Processing (NLP): Enables machine translation (Google Translate), sophisticated chatbots (ChatGPT), sentiment analysis, and text summarization.

- Speech Recognition & Generation: Forms the backbone of virtual assistants (Siri, Alexa), real-time transcription services, and realistic text-to-speech systems.

- Healthcare: Used for drug discovery, predicting patient outcomes from electronic records, and personalized medicine.

- Finance: Applied in algorithmic trading, fraud detection in real-time transactions, and automated risk assessment.

- Other Sectors: Optimizes supply chains, enables predictive maintenance in manufacturing, monitors crop health in agriculture, and enhances cybersecurity threat detection.
