What is a Neural Network?

A neural network is a foundational computational model in artificial intelligence (AI) and machine learning, loosely inspired by the structure of the human brain. At its core, it is a system of interconnected processing units, called artificial neurons or nodes, organized in layers. These networks learn to perform complex tasks such as pattern recognition, classification, and prediction by discovering relationships within data. Traditional programs follow explicit hand-written rules. Neural networks instead learn from examples: during training, they repeatedly adjust the strengths (weights) of the connections between neurons to reduce a measure of prediction error across large amounts of training data. This ability to learn from data underpins many of today's most advanced AI systems in computer vision, natural language processing, and beyond.

How Do Neural Networks Work?

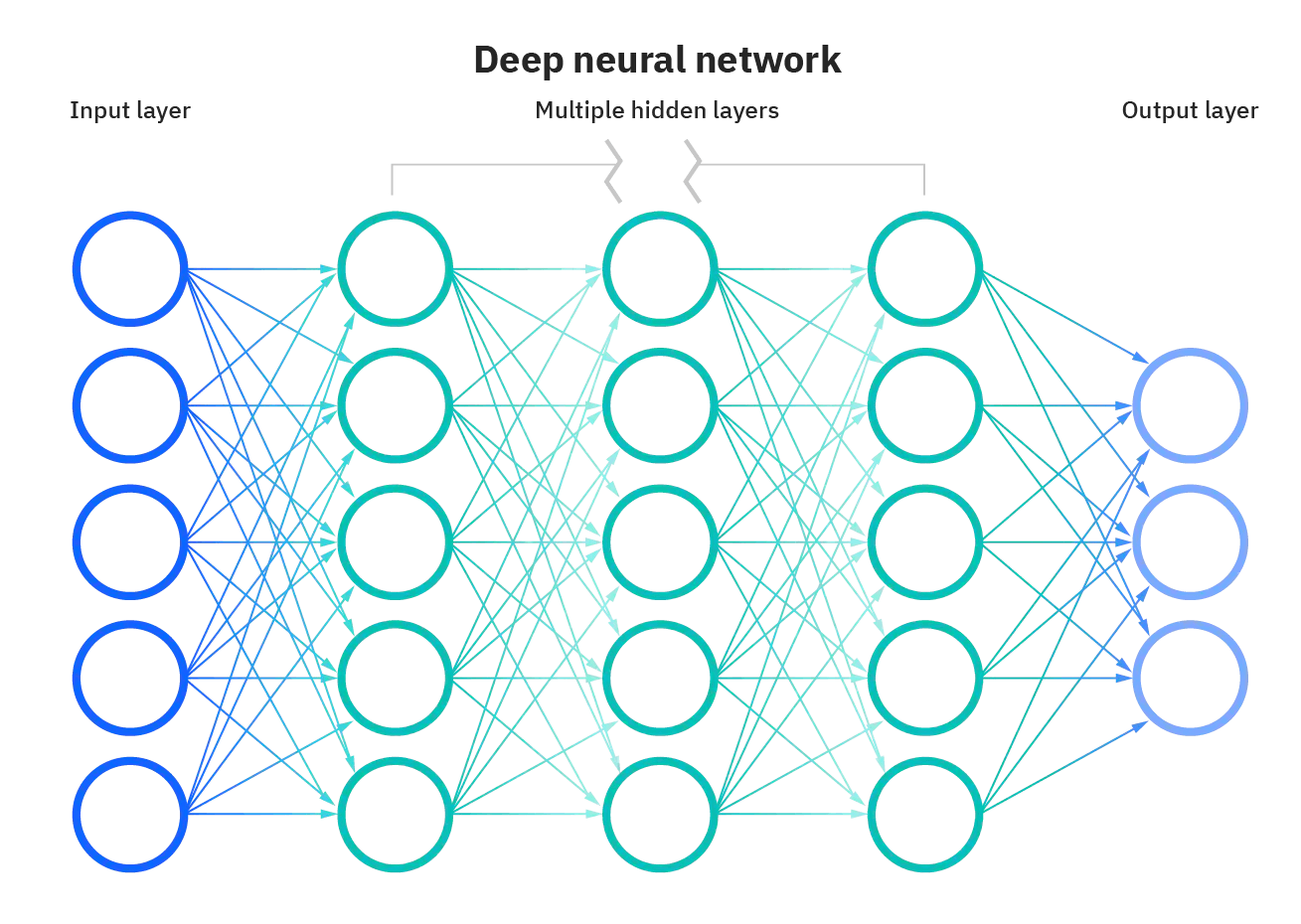

A neural network processes information through a series of connected layers:

- Input Layer: Receives the raw data (e.g., pixel values, words).

- Hidden Layers: One or more intermediate layers between the input and the output. Each neuron in these layers computes a weighted sum of its inputs (one weight per incoming connection), adds a bias, and passes the result through an activation function (such as ReLU or sigmoid). This activation introduces non-linearity. Without it, any stack of layers would collapse into a single linear (affine) transformation.

- Output Layer: Produces the final result, such as a classification label or a predicted value.
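To make the layer description concrete, here is a minimal pure-Python sketch of a forward pass through a tiny network with two inputs, two hidden ReLU neurons, and one output neuron. The weights are hand-picked for illustration rather than learned, chosen so that the network computes XOR, a function that no single linear neuron can represent. The names `dense`, `forward`, and the weight constants are our own, not from any library.

```python
def relu(z: float) -> float:
    """ReLU activation: keeps positive values, replaces negatives with 0."""
    return max(0.0, z)


def identity(z: float) -> float:
    """No activation (used for the output neuron in this example)."""
    return z


def dense(inputs, weights, biases, activation):
    """One fully connected layer.

    Neuron j computes activation(sum_i weights[j][i] * inputs[i] + biases[j]).
    """
    return [
        activation(sum(w * x for w, x in zip(neuron_w, inputs)) + b)
        for neuron_w, b in zip(weights, biases)
    ]


# Hand-picked (NOT learned) weights for a 2-2-1 network that computes XOR.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]


def forward(x):
    """Input layer -> hidden layer (ReLU) -> output layer (identity)."""
    hidden = dense(x, HIDDEN_W, HIDDEN_B, relu)
    return dense(hidden, OUTPUT_W, OUTPUT_B, identity)[0]


for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, forward(x))
```

If the hidden layer used `identity` instead of `relu`, the whole network would reduce to a single linear function of its inputs, and no linear function can produce XOR's outputs. That is the practical sense in which activation functions "introduce non-linearity".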

The magic lies in the learning process:

- Forward Propagation: Data flows from input to output, generating a prediction.

- Loss Calculation: The network's prediction is compared to the correct answer using a loss function.

- Backpropagation & Optimization: Backpropagation applies the chain rule to send the error backward through the network, computing how much each weight and bias contributed to it (the gradient of the loss). An optimizer (such as gradient descent) then uses these gradients to nudge every weight and bias slightly in the direction that reduces the loss, making the next prediction more accurate. This cycle repeats across thousands or millions of examples, allowing the network to build an internal, sophisticated mathematical model of the task.
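The forward, loss, backward, and update cycle can be sketched in plain Python for the smallest possible case: a single sigmoid neuron learning the logical AND function. This is an illustrative assumption, not a general implementation. With only one neuron, backpropagation reduces to a single application of the chain rule (for a sigmoid output with binary cross-entropy loss, the gradient with respect to the neuron's pre-activation is simply `p - y`), and the optimizer is plain full-batch gradient descent with a hand-picked learning rate `lr`.

```python
import math

# Toy dataset: the logical AND function as (inputs, target label) pairs.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 1.0), 1.0)]


def sigmoid(z: float) -> float:
    """Numerically stable sigmoid, squashing z into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w, b, x):
    """Forward propagation for one neuron: weighted sum plus bias, then sigmoid."""
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


def bce_loss(w, b):
    """Loss calculation: mean binary cross-entropy over the dataset."""
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for x, y in DATA:
        p = predict(w, b, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(DATA)


def train(epochs=2000, lr=1.0):
    w, b = [0.0, 0.0], 0.0
    history = [bce_loss(w, b)]
    n = len(DATA)
    for _ in range(epochs):
        # Backpropagation: accumulate dL/dw and dL/db via the chain rule.
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in DATA:
            dz = predict(w, b, x) - y  # dL/dz for sigmoid + cross-entropy
            grad_w[0] += dz * x[0]
            grad_w[1] += dz * x[1]
            grad_b += dz
        # Optimization: one gradient descent step on the averaged gradient.
        w[0] -= lr * grad_w[0] / n
        w[1] -= lr * grad_w[1] / n
        b -= lr * grad_b / n
        history.append(bce_loss(w, b))
    return w, b, history


w, b, history = train()
print(f"loss: {history[0]:.3f} -> {history[-1]:.3f}")
for x, y in DATA:
    print(x, y, round(predict(w, b, x), 3))
```

Swapping `DATA` for XOR would not let this single neuron classify all four points correctly, because one neuron can only draw a linear decision boundary. That limitation is where hidden layers, and backpropagation through them, become necessary.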

Types of Neural Networks

Different architectures are designed for specific data types and problems:

- Feedforward Neural Networks (FNN): The simplest type, in which information flows in one direction, from input to output, with no loops. They are the foundation for basic pattern recognition.

- Convolutional Neural Networks (CNN): The powerhouse for image and video analysis. Their convolutional layers slide small learned filters across the input to automatically detect spatial hierarchies of features, from edges to textures to whole objects.

- Recurrent Neural Networks (RNN): Designed for sequential data such as time series, text, or speech. They maintain a hidden state that acts as a "memory" of previous inputs, which makes them well suited to translation, speech recognition, and time-series forecasting. Long Short-Term Memory (LSTM) networks are a popular, more advanced variant designed to retain information over longer sequences.

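- Generative Adversarial Networks (GAN): A framework in which two networks compete: a Generator produces synthetic samples, and a Discriminator tries to tell them apart from real data. As training proceeds, the Generator learns to produce novel, realistic data such as images or music.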
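The core operations behind CNNs and RNNs can each be sketched in a few lines of plain Python. This is illustrative only: the filter and the RNN weights (`w_x`, `w_h`, `b`) are hand-picked rather than learned, and real networks operate on multi-channel tensors with many filters and hidden units. `conv1d` shows one small filter reused at every position of the input (here, a hypothetical edge-detecting filter), and `rnn` shows how a hidden state carries a "memory" of earlier inputs.

```python
import math


def conv1d(signal, kernel):
    """'Valid' 1-D convolution: slide the same small kernel across the input
    and take a dot product at each position. Like most deep learning
    libraries, this computes cross-correlation (the kernel is not flipped)."""
    k = len(kernel)
    return [
        sum(kernel[j] * signal[i + j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def rnn(sequence, w_x, w_h, b):
    """Minimal scalar RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).
    The hidden state h is fed back in at every step, so each output
    depends on the whole history of inputs seen so far."""
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Edge detection: the kernel [-1, 1] responds only where the signal jumps.
signal = [0, 0, 0, 1, 1, 1]
print(conv1d(signal, [-1, 1]))  # -> [0, 0, 1, 0, 0]

# Memory: the same input (1.0) yields a different state at each step,
# because the previous state feeds into the next one.
print(rnn([1.0, 1.0, 1.0], w_x=0.5, w_h=0.8, b=0.0))
```

In a trained CNN the filter values are learned rather than set by hand, and stacking convolutional layers lets later filters combine the edges found by earlier ones into larger patterns.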

Applications of Neural Networks

Neural networks are driving innovation across virtually every industry:

- Computer Vision: Facial recognition, medical image analysis for disease detection (e.g., tumors in X-rays), and enabling autonomous vehicles to perceive their environment.

- Natural Language Processing (NLP): Machine translation (Google Translate), intelligent chatbots (ChatGPT), sentiment analysis, and voice-activated assistants (Siri, Alexa).

- Predictive Analytics: Forecasting stock trends, energy demand, equipment failures (predictive maintenance), and sales.

- Recommendation Systems: Personalizing content on Netflix, Spotify, and Amazon by predicting user preferences.

- Finance: Detecting fraudulent transactions in real time and assessing credit risk.

Advantages of Neural Networks

These strengths make them well suited to many complex tasks:

- Learning Complex Patterns: They excel at modeling highly non-linear and intricate relationships in data that would be impractical to specify by hand.

- Adaptability: They can be retrained or fine-tuned to improve as new data becomes available.

- Robustness: They can often produce reliable results even with noisy or incomplete real-world data.

- Versatility: A single architecture can be adapted to a wide range of problems across different domains.

Limitations and Challenges

Despite their power, neural networks come with significant considerations:

- "Black Box" Nature: Their decision-making process is often opaque, making it difficult to interpret why a specific prediction was made—a critical issue in fields like healthcare or justice (Explainable AI is a growing field to address this).

- Data Hunger: They typically require massive amounts of high-quality labeled training data, which can be expensive and time-consuming to acquire.

- Computational Cost: Training large, deep networks demands significant processing power (GPUs/TPUs), time, and energy.

- Overfitting: A model may become overly specialized to its training data, performing poorly on new, unseen data. Techniques like regularization and dropout are used to combat this.

- Lack of Generalization: A model trained for one specific task often fails to perform well on a slightly different task without extensive retraining.
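Dropout and L2 regularization, two of the overfitting countermeasures mentioned above, are simple enough to sketch directly. The following minimal plain-Python illustration assumes the common "inverted dropout" formulation. The function names and the penalty strength `lam` are hypothetical choices for this example; deep learning frameworks ship their own implementations.

```python
import random


def dropout(activations, p, training, rng):
    """Inverted dropout: during training, zero each activation with
    probability p and scale the survivors by 1 / (1 - p) so the expected
    value of each activation is unchanged. At inference time
    (training=False) activations pass through untouched."""
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def l2_penalty(weights, lam):
    """L2 regularization term added to the training loss:
    lam * (sum of squared weights). It discourages large weights,
    which tends to yield smoother models that overfit less."""
    return lam * sum(w * w for w in weights)


rng = random.Random(0)
acts = [1.0] * 10
print(dropout(acts, p=0.5, training=True, rng=rng))   # roughly half zeroed, the rest 2.0
print(dropout(acts, p=0.5, training=False, rng=rng))  # unchanged
print(l2_penalty([0.5, -1.0, 2.0], lam=0.01))
```

Randomly silencing neurons during training prevents the network from relying too heavily on any single unit, while the L2 term trades a little training accuracy for weights that generalize better to unseen data.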

In conclusion, neural networks are a transformative technology that forms the backbone of the current AI revolution. Understanding their principles, capabilities, and constraints is essential for leveraging their potential responsibly and effectively across science, business, and society.
