Ollama

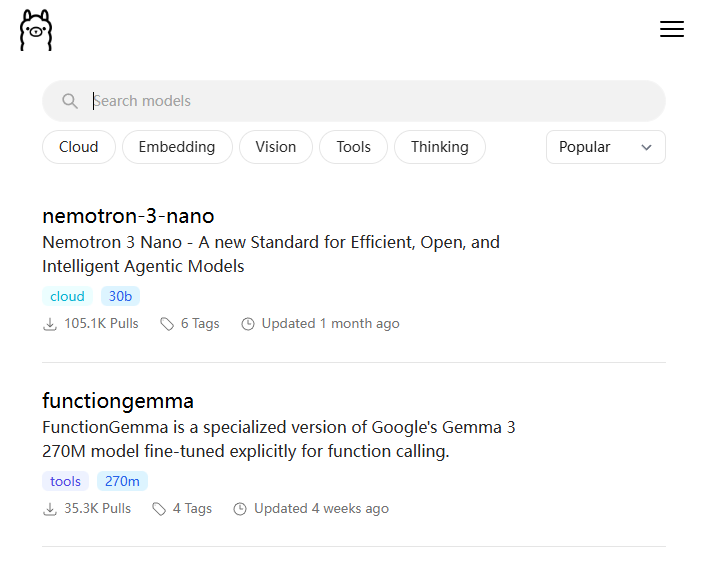

Ollama is an open-source tool for running, managing, and serving large language models (LLMs) locally on your own machine, with an optional cloud service for larger workloads. Aimed at developers, researchers, and privacy-conscious creators, it runs on macOS, Windows, and Linux and provides a command-line interface, a REST API, and Python and JavaScript client libraries, giving you control over model selection, customization, and offline inference without relying on third-party cloud services.

Introduction to Ollama

Ollama is a developer-focused platform that simplifies working with open-source large language models by allowing them to run locally on your machine or via optional cloud resources. It abstracts the complexity of model setup and execution, giving users a consistent experience across environments while prioritizing data privacy and offline capability.

Key Features of Ollama

- Local and Cloud Deployment: Run LLMs entirely on your own hardware for maximum privacy, or use Ollama Cloud for scalable, high-performance inference.

- Model Library and Compatibility: Access a growing library of open models including Llama, Gemma, Mistral, and more, with support for importing community formats such as GGUF and Safetensors.

- CLI and API Access: Interact with models from the command line, or integrate them into applications through Ollama's REST API and its Python and JavaScript client libraries.

- Custom Modelfiles: Create reproducible model configurations with Modelfiles that define the base model, prompt template, parameters, and system message, which suits tailored AI workflows.

- Multimodal Support: With models that accept images, combine text and image prompts in a single request, enabling richer use cases in content creation and analysis.

- Privacy-First: Local execution keeps data on your device by default, reducing exposure to external servers or cloud log retention.
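To make the API access described above concrete, here is a minimal sketch of a call to a locally running Ollama server using only the Python standard library. It assumes the server is listening on its default address (`http://localhost:11434`) and that a model has already been pulled. The model name `llama3.2` in the usage note is only an example, and the helper names `build_generate_request` and `generate` are made up for this illustration.

```python
import json
import urllib.request

# Assumption: a local Ollama server on its default address.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for the /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        body = json.load(reply)
    # With "stream": False the server answers with a single JSON object
    # whose "response" field holds the completion text.
    return body["response"]
```

With the server running, `generate("llama3.2", "Why is the sky blue?")` would return the model's answer as a string. The prompt and the response travel only between the script and the local server, which is the privacy property the last bullet refers to.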

How Ollama Enhances AI Development

Ollama bridges the gap between powerful open-source AI models and practical application development by giving teams and individuals tools to experiment, prototype, and deploy models without recurring API costs or cloud dependencies. Its flexible architecture accommodates development workflows, RAG systems, chatbots, and embedded AI services with consistent local performance.
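As one illustration of the chatbot use case, the sketch below keeps a conversation history on the client and sends it to the `/api/chat` endpoint on each turn. It assumes a local server on the default port. The helper names `build_chat_payload` and `chat_turn` are hypothetical, and the model name in the usage note is just an example.

```python
import json
import urllib.request

# Assumption: a local Ollama server on its default address.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_payload(model, history, user_text):
    """Return (payload, messages) with the new user turn appended.

    The caller's history list is left unmodified.
    """
    messages = history + [{"role": "user", "content": user_text}]
    payload = {"model": model, "messages": messages, "stream": False}
    return payload, messages


def chat_turn(model, history, user_text, timeout=120.0):
    """Send one chat turn; return (assistant_text, updated_history)."""
    payload, messages = build_chat_payload(model, history, user_text)
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        body = json.load(reply)
    # The non-streaming reply carries the assistant turn under "message",
    # in the same {"role": ..., "content": ...} shape as the request messages.
    assistant = body["message"]
    return assistant["content"], messages + [assistant]
```

A chatbot loop would start with something like `history = [{"role": "system", "content": "Be brief."}]` and call `text, history = chat_turn("llama3.2", history, user_input)` for each user message. Because the server does not keep the conversation between requests, the client passes the full history every time.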

Who Should Use Ollama?

Ollama is ideal for:

- Developers & Engineers: Build and test AI applications locally with minimal setup.

- Privacy-Focused Users: Keep sensitive data on-device rather than in cloud APIs.

- Researchers: Experiment with different open models and configurations.

- Product Teams: Deploy AI features into apps with flexible backend control.

Why Choose Ollama?

Ollama stands out by offering a local-first, open-source approach to LLM deployment that balances control, performance, and privacy. It removes typical barriers associated with cloud-only AI development and lets users run capable models on ordinary desktop and laptop hardware, within the limits of available memory and GPU resources, while still supporting cloud scaling when needed.
